|

I received my M.S. in Computer Science from Columbia University. My research interests are multimodal learning, efficient machine learning, and applications of deep learning. |

|

Yizhen Luo, Xing Yi Liu, Kai Yang, Kui Huang, Massimo Hong, Jiahuan Zhang, Yushuai Wu, Zaiqing Nie

Health Data Science 2024

arXiv | Code

Human pharmaceutical experts draw insight from both structured knowledge in knowledge bases and unstructured knowledge in biomedical literature. Our proposal, KEDD, extracts knowledge similarly and solves a variety of drug discovery tasks in a unified framework. |

|

Xing Yi Liu, Homayoon Beigi

IMCOM 2024

arXiv | Code | Slides

EfficientPunct outperforms the previous best punctuation restoration model by 1.0 F1 points, using less than a tenth of its parameters to process embeddings. We create an ensemble streamlined from a speech recognizer to extract audio embeddings. |

|

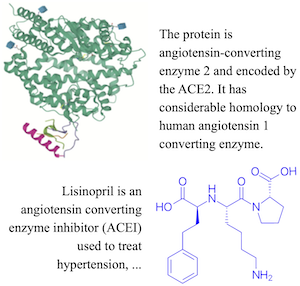

Yizhen Luo, Kai Yang, Massimo Hong, Xing Yi Liu, Zaiqing Nie

arXiv 2023

arXiv | Code

We introduce MolFM, a multimodal molecular foundation model designed to facilitate joint representation learning from molecular structures, biomedical texts, and knowledge graphs. We propose cross-modal attention to facilitate comprehension between these modalities. |

|

Anqi Cui, Guangyu Feng, Borui Ye, Kun Xiong, Xing Yi Liu, Ming Li

NTCIR-12 2016

Our submission to the NTCIR-12 task treats short text conversation as a community question-answering problem. We achieved a mean nDCG@1 of 0.2767, a mean P+ of 0.4284, and a mean nERR@10 of 0.4095. |

|

Thank you to Jon Barron for this website's template. |